scout and scored are executed by the root user on a host machine in the network, and users execute scrun commands specifying the host where scored is running. Thus, multiple users can execute programs on the cluster, subject to the scheduling policies of the scored process started by the root user.

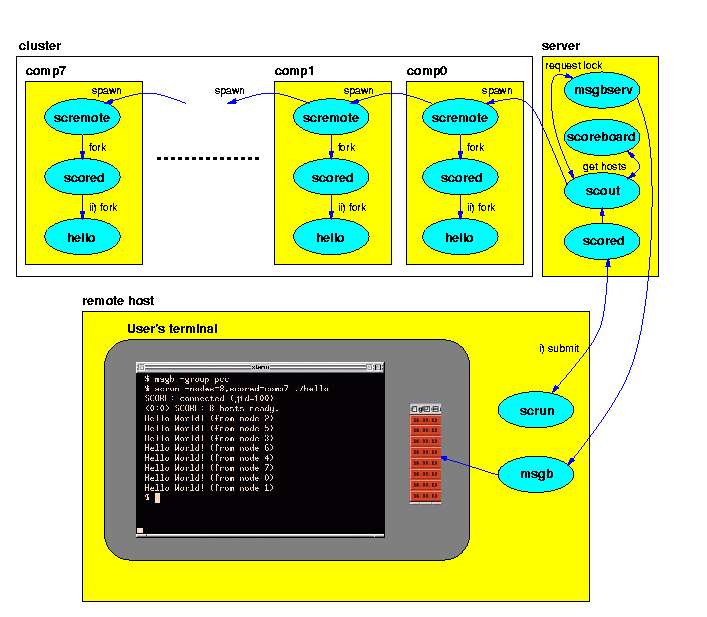

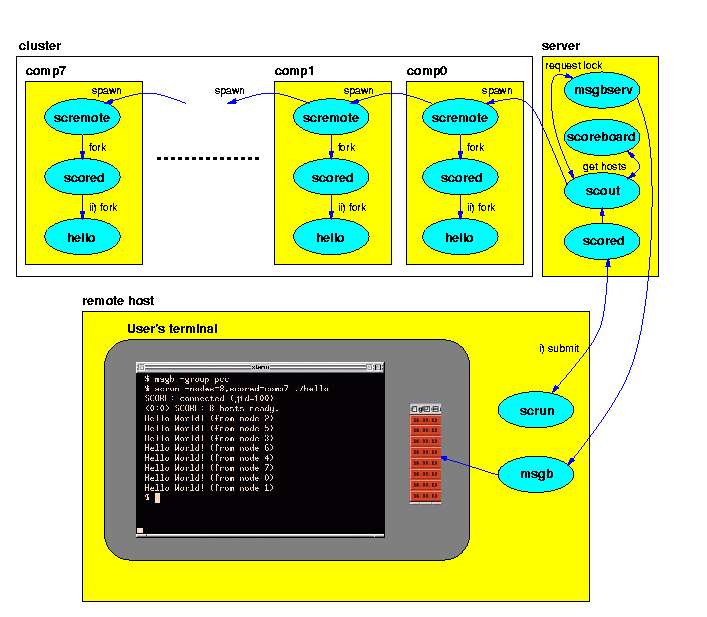

Suppose that you have a cluster of eight compute hosts, comp0 through comp7, and a server host running the Cluster Database Server (scoreboard) and the Compute Host Lock Server (msgbserv), and that root has started the scout and scored processes.

Here is an example session to execute the hello command on the cluster:

    $ msgb -group pcc
    $ scrun -nodes=8,scored=comp7 ./hello
    SCORE: connected (jid=100)
    <0:0> SCORE: 8 hosts ready.
    Hello World! (from node 2)
    Hello World! (from node 5)
    Hello World! (from node 3)
    Hello World! (from node 6)
    Hello World! (from node 4)
    Hello World! (from node 7)
    Hello World! (from node 0)
    Hello World! (from node 1)
    $

The procedure to execute hello is as follows:

1. scrun is issued by the user to execute the program. It requests scored, running on host comp7 (the default host is the last host in the cluster group), to execute the program on 8 of the compute hosts.

2. scored requests the scored processes running on the cluster hosts to fork and exec the user's program, which runs until completion. When the program completes, the user processes are terminated.
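The first step of the procedure can be sketched as a small dry-run helper that composes the scrun command line from the session. This is only an illustration: `build_scrun_cmd` is a hypothetical name, and the sketch uses nothing beyond the `-nodes=<n>,scored=<host>` option form shown in the session. It prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of SCore): compose the scrun command line
# in the same form as the example session.
build_scrun_cmd() {
    nodes=$1      # number of compute hosts to request
    host=$2       # host where scored is running
    shift 2
    printf 'scrun -nodes=%s,scored=%s' "$nodes" "$host"
    # Append the program and any arguments it takes
    for arg in "$@"; do
        printf ' %s' "$arg"
    done
    printf '\n'
}

# Dry run: print the command instead of executing it.
build_scrun_cmd 8 comp7 ./hello
```

Printing the command first makes it easy to check the node count and the scored host before submitting a job to a shared cluster.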

Note that the Compute Host Lock Server is continuously locked by the scored process running on the server host. This prevents other processes from locking the Compute Host Lock Server and executing their own programs.
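The effect of a continuously held lock can be illustrated with a toy model. This sketch does not use or imitate the msgbserv protocol. It assumes a directory as a stand-in for the lock, relying on the fact that `mkdir` fails when the directory already exists. The names `try_lock`, `LOCK_ROOT`, and `LOCK_DIR` are hypothetical.

```shell
#!/bin/sh
# Toy model only: a directory stands in for the lock. mkdir is atomic,
# so it succeeds for exactly one caller while the directory exists.
LOCK_ROOT=$(mktemp -d)
LOCK_DIR="$LOCK_ROOT/compute-host-lock"

try_lock() {
    owner=$1
    if mkdir "$LOCK_DIR" 2>/dev/null; then
        # Record who holds the lock
        echo "$owner" > "$LOCK_DIR/owner"
        echo "$owner: lock acquired"
        return 0
    fi
    echo "$owner: denied, lock held by $(cat "$LOCK_DIR/owner")"
    return 1
}

try_lock scored                  # the long-running holder takes the lock
try_lock other-process || true   # refused while scored still holds it
```

Because the holder never releases the lock in this model, every later attempt is refused, which mirrors the behaviour described above.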

PC Cluster Consortium